Author: Ron Wilson

Many embedded designs must be dependable: a failure can cause irreparable harm to people or property. Until recently, the problem was addressed through careful design and reliable hardware. If the software and logic are correct and the hardware does not fail, the system will work properly.

Today, however, we live in the era of cyberspace. If your system is to work, you must assume it will be attacked by everyone from mischievous hackers to criminal gangs, and even by well-funded government laboratories. To protect your system, you must determine what, and ultimately whom, you can trust. This is not a simple task; some people think it is impossible. Nonetheless, you must do it.

Define a hierarchical structure

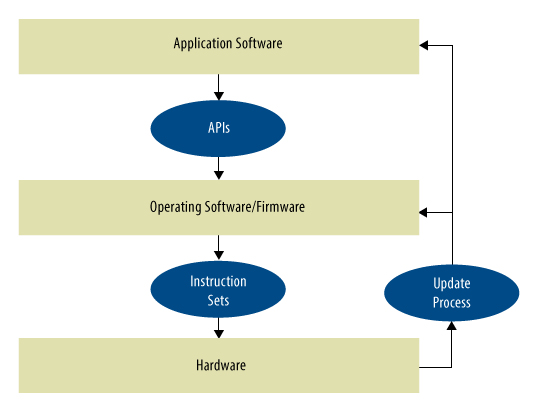

The question "Can I trust my system?" is inherently hard to answer. To get a grip on it, we need to divide the big problem into many smaller, more tractable problems. This is usually done by partitioning the system along well-defined boundaries. The most common are the application software; the operating system, boot code, and firmware; and the hardware. In general, if we can define the interfaces between these layers, and thus define what we must trust at each level, then if we can trust each layer of the design, we can trust the system (Figure 1).

Figure 1 Dividing a system into layers defines the interfaces across which trust must be established.

Trust is only as strong as the layers beneath it. Even if your application software is flawless, you must also trust the operating system (OS) to respond correctly through its application programming interface without corrupting your code. That in turn requires you to believe that there is no malicious code in the boot loader that could compromise the OS, and no Trojan in the hardware that could take control of the system. Some architects describe this recursive problem as finding a root of trust. That metaphor may be overly optimistic, however. During a panel on secure systems at the Design Automation Conference (DAC) in June, Vincent Zimmer, senior principal engineer at Intel, recalled the apocryphal story of a speaker who insisted that the Earth is a flat plate resting on the back of a turtle. Asked what the turtle stood on, the speaker replied, "It's turtles all the way down."

Ultimately, if the recursion ends anywhere, trust rests not on a design or a methodology but on engineers: on people themselves. At least, some experts think so.

Trusted source

All of this raises a fundamental question: how can you trust any layer of your design? The term "root of trust" implies that if you dig down far enough you will reach a final, trustworthy layer, and then you are safe. That is not the case.

In general, there are only a few ways to establish trust in a layer of the design, whether it is hardware or software:

■ You can obtain the design from a source you choose to trust.

■ You can formally verify the design.

■ You can provide redundancy so that, for example, two different designs must agree functionally before their output is acted on, or one design checks the output of another.

■ You can limit the scope of the design's functions so that it cannot actually affect anything you want to protect.
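The redundancy option can be illustrated with a toy example: two independently designed square-root routines whose result is released only if they agree, plus a checker that validates one routine's output by squaring it. The function names and tolerances are hypothetical, chosen only to show the pattern; in a real system the diverse channels would typically be separate hardware or separately developed software.

```python
import math


def sqrt_newton(x: float) -> float:
    """Channel A: Newton's iteration for the square root of x >= 0."""
    if x == 0.0:
        return 0.0
    g = x if x >= 1.0 else 1.0  # start at or above the true root
    for _ in range(100):
        nxt = 0.5 * (g + x / g)
        if abs(nxt - g) <= 1e-15 * g:
            return nxt
        g = nxt
    return g


def sqrt_bisect(x: float) -> float:
    """Channel B: an independently designed bisection search on [0, max(1, x)]."""
    lo, hi = 0.0, max(1.0, x)
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if mid * mid < x:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def redundant_sqrt(x: float, channel_a=sqrt_newton, channel_b=sqrt_bisect,
                   rel_tol: float = 1e-9) -> float:
    """Release a result only when two diverse implementations agree."""
    if x < 0:
        raise ValueError("negative input")
    a, b = channel_a(x), channel_b(x)
    if not math.isclose(a, b, rel_tol=rel_tol, abs_tol=1e-12):
        raise RuntimeError(f"channels disagree ({a!r} vs {b!r}); refusing to act")
    return a


def checked_sqrt(x: float, compute=sqrt_newton, rel_tol: float = 1e-9) -> float:
    """One design checks another: squaring the result is a cheap, independent test."""
    y = compute(x)
    if not math.isclose(y * y, x, rel_tol=rel_tol, abs_tol=1e-12):
        raise RuntimeError("checker rejected the result")
    return y
```

For example, `redundant_sqrt(2.0, channel_a=lambda v: sqrt_newton(v) + 1e-3)` simulates a faulty channel and raises `RuntimeError` instead of returning a wrong value.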

Of course, once you trust a particular layer of the design, you must also establish trust in every layer beneath it that could physically alter its behavior, all the way down. It is worth examining this process in more detail, layer by layer.

Trusted application

Let's start with application software, because that is where attackers are likely to start. "Somewhere, it is always 1999," Dino Dai Zovi, director of mobile security at Square, said in a DAC panel. "Hackers don't need to get into your hardware. It is still very easy to get into your software." In a later panel on automotive security, Craig Smith, a reverse-engineering expert at Theia Labs, agreed: "No system is impenetrable. But today it is just too easy. Buffer-overflow attacks still succeed against embedded systems. We simply have not spent enough time on this."

Several factors combine to make applications vulnerable. One is lack of attention: managers still prioritize schedules and coding efficiency over security. Jeff Massimilla, chief product cybersecurity officer at General Motors, warned during the automotive panel: "If security is not raised to the board level, we will not get the resources we need."

Accessibility is also a problem. Many embedded systems run continuously and, once deployed in the field, may not be reachable for remote updates. You cannot take a substation or a power plant offline for a software update. Massimilla noted that you also cannot update the code in a moving car: "The car cannot be offline." So when you discover vulnerabilities in a deployed system, you may be unable to do anything about them.

Scale is another problem, Massimilla said. "We have 30 to 40 electronic control units (ECUs) to secure in a new car." Jeffrey Owens, CTO and executive vice president of Delphi, agreed: "Today's car has 50 ECUs and more than 100 million lines of code. That is four times as complex as the F-35 fighter." Code on this scale is beyond the reach of formal verification tools except at the module level, and full redundancy is out of the question: automakers will not add ECUs just for security. So applying every method of establishing trust is very difficult.

Size also means that most of the code will be reused, which makes it possible to establish trust one module at a time. The automotive functional-safety standard ISO 26262 provides a method for qualifying systems built from trusted IP modules.

ISO 26262 holds that you can assemble components that meet certain strict criteria into a compliant system. Components developed under 26262 itself, with its requirements for formal inspections, strict traceability, and qualified tools, are one example. Components developed before 26262 can also be accepted if they have been widely used in the field without failure. Because all of these components are qualified independently of the specific system into which they will be integrated, they are called safety elements out of context (SEooCs, if you prefer acronyms).

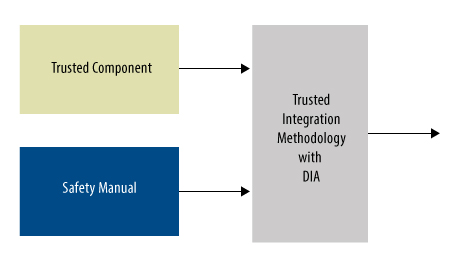

Because they are qualified out of context, each SEooC must come with a formal safety manual listing the requirements and assumptions for using the IP safely. Anyone reusing an SEooC in a 26262 design must follow that manual. The standard also requires another document, the development interface agreement (DIA): a contract among the multiple parties involved in a 26262-compliant design that defines who plays which roles and what responsibilities each assumes throughout the process (Figure 2).

Figure 2 Integrating a trusted component requires both safe usage and a trusted process.

ISO 26262 is an automotive standard. It derives from the general industrial standard IEC 61508 but has more specific requirements, and it is far more stringent than the ad hoc methods common in embedded design. As more embedded designers confront the real costs of being attacked, however, the influence of 26262 is spreading to other application areas.

This is almost the only practical way to build trust in large-scale application software. Operating systems and firmware are more complicated.

OS problem

Operating systems and firmware share many of the problems of application code, but there are some clear differences. First, many designs license an entire operating system, or even an entire development platform, rather than writing their own, and apart from small real-time kernels it is hard to find one that could qualify as an SEooC. Second, system software and firmware often need to be updated in the field: recall how often you update Windows. Third, system developers generally treat the OS as a black box, knowing nothing about its code, how it works, or the risks it carries.

One major trend in system software poses a real threat to security. "Open source is not a reliable methodology," Marilyn Wolf, who holds Georgia Tech's chair in embedded computing systems, reminded DAC attendees. In a separate webinar on ISO 26262 SEooCs, Robert Bates, chief safety officer of Mentor Graphics' Embedded Division, was blunter: "Open-source software as it exists today can never be an SEooC. Basically, forget about Linux. There is an effort in Europe to qualify a version of Linux to Automotive Safety Integrity Level (ASIL) B [the second-lowest of the four ASILs in 26262], but it will take two to three years."

Experts suggest negotiating with the customer to lower the system's ASIL, or isolating the OS so that it can physically affect only noncritical functions. For example, Linux may be acceptable for a user interface that only displays information, as long as it cannot affect the control loops. Otherwise, the alternatives are to use a field-proven kernel, build an OS from SEooCs or similarly trusted components, write a custom 26262-compliant kernel, or run the application on bare-metal hardware. In a multicore system, you can run different operating systems on two or three cores and use duplicate checking or voting, but you must still carefully handle the case in which two of the OSs are compromised at once.
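A two-out-of-three voter of the kind described above might look like the hypothetical sketch below, where each entry in `outputs` is the command computed by the same control task running under a different OS. When no two channels agree, the sketch falls back to an assumed safe state rather than guessing; the function names and the `"hold"` state are illustrative only.

```python
from collections import Counter
from typing import Hashable, Optional, Sequence


def vote_2oo3(outputs: Sequence[Hashable]) -> Optional[Hashable]:
    """Two-out-of-three majority vote over three channel outputs.
    Returns the majority value, or None when no two channels agree."""
    if len(outputs) != 3:
        raise ValueError("expected exactly three channel outputs")
    value, count = Counter(outputs).most_common(1)[0]
    return value if count >= 2 else None


def actuate(outputs: Sequence[Hashable], safe_state: Hashable = "hold") -> Hashable:
    """Drive the actuator with the voted value, or fall back to a safe state."""
    voted = vote_2oo3(outputs)
    return safe_state if voted is None else voted
```

Usage: `vote_2oo3(["open", "open", "close"])` returns `"open"`. But if two channels are compromised in the same way, `vote_2oo3(["evil", "evil", "open"])` returns `"evil"`: the voter cannot tell a colluding majority from a correct one, which is why the case of two compromised OSs needs separate handling.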

Updates raise some new issues. An update should be of SEooC quality: even though the team that created it does not know your system, you must be sure that nothing in the update will make the system vulnerable. Before you replace any code in your system, you must make sure that the new code comes from a legitimate source and that what you received is exactly what the source sent. In general, this means the update should be protected with a strong cryptographic hash, signed with the certificate of its authorized owner, and encrypted. Your system must receive the update, decrypt it, and verify the signature and hash before installing it.
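The receive, verify, install sequence can be sketched in Python as below. To stay within the standard library, this sketch swaps in an HMAC over the image's SHA-256 digest as a stand-in for the vendor's signature; a real design would more likely check an asymmetric signature against a certificate held in protected storage. `DEVICE_KEY` and the function names are hypothetical, and decryption is assumed to have already happened.

```python
import hashlib
import hmac
from typing import Tuple

# Hypothetical key provisioned into the device at manufacture. A real design
# would more likely store a vendor public key and verify an asymmetric signature.
DEVICE_KEY = b"hypothetical-provisioned-key"


def sign_update(image: bytes, key: bytes = DEVICE_KEY) -> Tuple[bytes, bytes]:
    """Vendor side: compute the image digest and an authentication tag over it."""
    digest = hashlib.sha256(image).digest()
    tag = hmac.new(key, digest, hashlib.sha256).digest()
    return digest, tag


def verify_update(image: bytes, digest: bytes, tag: bytes,
                  key: bytes = DEVICE_KEY) -> bool:
    """Device side: the image must match the digest, and the digest must
    carry a valid tag from the key holder."""
    if not hmac.compare_digest(hashlib.sha256(image).digest(), digest):
        return False  # image altered or corrupted in transit
    expected_tag = hmac.new(key, digest, hashlib.sha256).digest()
    return hmac.compare_digest(expected_tag, tag)  # False if not from the key holder


def install_if_valid(image: bytes, digest: bytes, tag: bytes) -> str:
    # Assumes decryption has already produced `image`; flashing is omitted.
    if not verify_update(image, digest, tag):
        return "rejected"
    return "installed"
```

An image modified after signing, or one tagged with any key other than `DEVICE_KEY`, is rejected before anything would be written to flash.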

But do you trust this decryption and verification process? To do so, you must trust the software that performs it, as well as the hardware that executes that software, including, for example, any hardware crypto accelerator and the on-chip bus. This question takes us across the boundary into hardware security. Here the concepts are the same, starting with trusted sources, but the demands are greater. Designing and updating hardware is difficult and time-consuming. The tool chain is more complex and involves more parties. Attacks can be physical as well as logical. And because attacking hardware is difficult and expensive, defenders here face the most skilled and patient adversaries.
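One common way to make the verification code itself trustworthy is a chain of trust at boot: an immutable boot ROM checks the first stage, and each verified stage checks the next before handing over control. The Python sketch below models that idea with SHA-256 digests; the stage names, the `Stage` layout, and the practice of embedding each successor's digest in its predecessor are illustrative assumptions, not a description of any particular product. Note that the ROM digest is simply assumed to be trustworthy: the chain pushes the question of trust down into the hardware rather than answering it.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass
class Stage:
    name: str
    code: bytes
    # Digest this stage expects of its successor. It is covered by this
    # stage's own measurement, so it cannot be altered undetected.
    next_digest: Optional[bytes] = None


def measure(stage: Stage) -> bytes:
    """SHA-256 over the stage's code plus the successor digest it carries."""
    return hashlib.sha256(stage.code + (stage.next_digest or b"")).digest()


def build_chain(images: Sequence[Tuple[str, bytes]]) -> Tuple[bytes, List[Stage]]:
    """Build stages from last to first so each embeds its successor's digest.
    Returns the digest to burn into boot ROM and the ordered stages."""
    stages: List[Stage] = []
    next_digest: Optional[bytes] = None
    for name, code in reversed(images):
        stage = Stage(name, code, next_digest)
        next_digest = measure(stage)
        stages.append(stage)
    if next_digest is None:
        raise ValueError("chain must contain at least one stage")
    stages.reverse()
    return next_digest, stages


def boot(rom_digest: bytes, stages: Sequence[Stage]) -> List[str]:
    """Run stages in order; stop at the first stage whose measurement does
    not match what the previous, already-verified stage expected."""
    expected: Optional[bytes] = rom_digest  # assumed immutable: the root of trust
    booted: List[str] = []
    for stage in stages:
        if expected is None or measure(stage) != expected:
            break  # refuse to hand control to an unverified stage
        booted.append(stage.name)
        expected = stage.next_digest
    return booted
```

With `rom, chain = build_chain([("bootloader", b"BL v1"), ("os", b"RTOS v3"), ("app", b"app v7")])`, `boot(rom, chain)` runs all three stages; after `chain[1].code = b"RTOS v3 + trojan"`, it stops after `"bootloader"`.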

Protecting hardware

Trusted hardware must start with a trusted specification: a requirements document to which every later unit of the design can be traced. You cannot consider a system secure until you have clearly defined what it may and may not do.

In principle, requirements in this form make formal equivalence checking and assertion-based testing possible. In practice, however, requirements are often expressed ambiguously, in a language that is not the developers' native tongue, and sometimes by reference to previous-generation hardware whose full functionality was never verified. In such projects, it is vital that hardware and system developers communicate promptly about every issue that arises, which underscores the importance of the 26262 DIA. You would not want to tape out an SoC with open questions such as "How does a block move behave?" or "Under what conditions can the stored key be read?"

From the agreed requirements, the design should ideally proceed through successive levels of abstraction, using trusted tools at each level, with equivalence checking, assertion-based testing, and inspection along the way. This process must detect not only unintentional errors but also deliberate sabotage. Yes, sabotage is a real-world concern. At the DAC security panel, Lisa McIlrath of Raytheon BBN Technologies explained: "I know of specific cases of persistent attacks on people's Verilog, because I did them. Some attacks are in the CPU core, in particular the instruction-decode logic. You have to have trusted Verilog."

Tools have the same problem as IP. IP clearly fits the SEooC discussion, but what about design tools? Synthesis, place-and-route, and even timing tools all have the opportunity to modify the design. This, too, is a real-world concern. In his DAC keynote, Cadence president and CEO Lip-Bu Tan said, "Security is another requirement, after meeting customer needs. We are working actively to protect the security of our tools and IP. We know our customers are very worried."

Security of this kind cannot be limited to one operating mode. Devices can be attacked successfully through their debug and test modes; JTAG ports typically expose a great deal of internal data to anyone who connects to them. Then there are side-channel and fault-injection attacks: monitoring the core supply current to infer the flow of code execution, for example, or, as one DAC paper demonstrated, injecting faults while a text string is being encrypted in order to recover the encryption key. Finally, there are physical attacks: lowering the supply voltage to force an exploitable fault, or physically delayering and reverse-engineering the SoC.
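Monitoring supply current works because code whose execution path depends on secret data leaves a data-dependent signature. The hypothetical Python sketch below contrasts an early-exit comparison, which examines more bytes the longer the matching prefix, with a comparison that does the same work regardless of where the first mismatch occurs. The byte count returned by `leaky_compare` is only a stand-in for the timing or current signal an attacker would measure; Python itself makes no constant-time guarantees, so real code would rely on `hmac.compare_digest` or a vetted routine in C.

```python
import hmac
from typing import Tuple


def leaky_compare(secret: bytes, guess: bytes) -> Tuple[bool, int]:
    """Early-exit comparison. Returns (match, bytes_examined); the count
    stands in for the timing or supply-current signal an attacker observes."""
    examined = 0
    for s, g in zip(secret, guess):
        examined += 1
        if s != g:
            return False, examined  # exits sooner the earlier the mismatch
    return len(secret) == len(guess), examined


def uniform_compare(secret: bytes, guess: bytes) -> bool:
    """Examines every byte and accumulates differences without branching on
    them, so the work done does not reveal where a mismatch occurs.
    (Only the length check leaks, and lengths are usually public.)"""
    if len(secret) != len(guess):
        return False
    diff = 0
    for s, g in zip(secret, guess):
        diff |= s ^ g
    return diff == 0


def safe_compare(secret: bytes, guess: bytes) -> bool:
    """In Python, prefer the standard library's constant-time comparison."""
    return hmac.compare_digest(secret, guess)
```

`leaky_compare(b"secret", b"xxxxxx")` returns `(False, 1)` while `leaky_compare(b"secret", b"sexxxx")` returns `(False, 3)`; that difference is what lets an attacker recover a secret one byte at a time.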

In extreme cases, people will take extreme measures. You can split the design into two pieces, neither intelligible without the other, fabricate them on different foundry lines, and assemble them in a 3D module. You can obfuscate the design with misleading layout and fake or ambiguous features. These methods are difficult and costly, but if the alternative is a foreign power controlling your air-defense system, they are entirely reasonable. In fact, some serious researchers have proposed them for very high-risk systems.

In the end, these considerations come down to fabrication. Do you really trust the owner of the foundry line, who has access to your design files, test files, and wafers? What about your assembly and test facilities? Any of them could insert points of attack into your hardware or reverse-engineer your design. And if the global political or economic situation changed dramatically, would you still trust them?

How much protection is enough

Knowing all this, you may be tempted to give up. Protecting every stage of the design, from chip specification to application support, is technically difficult and economically unviable. Siva Narendra, CEO of Tyfone, agreed during the security panel: "It is impossible to build trust at every level." Fortunately, it usually is not necessary.

Dai Zovi noted, "Attackers are not just reckless bad guys. They weigh risks. They scan slowly, and if they don't find what they expect, they won't spend the effort to attack."

And the cost of an attack varies enormously. You can break into many applications and operating systems with freely available tools, an ordinary PC, and some know-how. A truly sustained attack requires assembling a botnet of other unprotected systems, or ganging together a bank of graphics processing units (GPUs). Narendra made the point to an uneasy audience: "A thousand graphics cards plus nine hours are enough to break any 64-character password." Reverse-engineering an SoC, by contrast, requires obtaining a few chips and using million-dollar focused-ion-beam tools and electron microscopes.
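The asymmetry in attack costs is easy to quantify for brute-force password guessing: the number of candidates is the alphabet size raised to the password length, so each added character multiplies the work. The sketch below assumes 95 printable ASCII characters and a purely hypothetical rate of 10^10 guesses per second per GPU; real rates vary by orders of magnitude depending on the password-hashing scheme, so the printed hours show the scaling, not a real-world figure.

```python
PRINTABLE_ASCII = 95  # printable ASCII characters, space included
HYPOTHETICAL_RATE = 1e10  # guesses/s per GPU: an assumption, not a measured figure


def keyspace(alphabet_size: int, length: int) -> int:
    """Number of candidate passwords of exactly `length` characters."""
    return alphabet_size ** length


def exhaustive_search_hours(alphabet_size: int, length: int,
                            guesses_per_second_per_gpu: float, gpus: int) -> float:
    """Worst-case hours to try every candidate, assuming perfect parallel scaling."""
    total_rate = guesses_per_second_per_gpu * gpus
    return keyspace(alphabet_size, length) / total_rate / 3600.0


if __name__ == "__main__":
    for n in (6, 8, 10, 12):
        hours = exhaustive_search_hours(PRINTABLE_ASCII, n, HYPOTHETICAL_RATE, gpus=1000)
        print(f"{n:2d} characters: {hours:.3g} hours")
```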

A hacker who just wants free soda from a vending machine will not invest that much. But a criminal gang might invest tens of thousands of dollars in a credible threat against the power grid, one that could cost a utility tens of millions of dollars to repair. (Unfortunately, they can now do more than that.) A nation might fund research laboratories to attack the critical military or civilian systems of potential adversaries. In principle, you need to protect your design only against attacks that would be economically worthwhile to the attacker.

For many embedded systems, however, risks and returns are hard to estimate. Is it worth keeping the kettle in your kitchen from being hacked? What about hundreds of kettles across the country? And if an attacker can use a single node as the starting point for a massive attack on an entire network, compromising even an insignificant device can be worth far more than the device itself.

Despite this uncertainty, the best answer to the question posed at the start of this article may be return on investment. You can stop digging for a root of trust at the level beyond which a rational attacker would not invest. For many systems, this means carefully written application code, ARM TrustZone or a similar mechanism for a secure update process, and very secure key storage. For life-critical transportation and industrial systems, and for a nation's energy and communications infrastructure, it means ISO 26262 or something similar. For defense systems, it means split designs, obfuscation, multiple domestic foundry lines, and formally verified, mature code.
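The stopping rule can be made concrete with a toy model: list the layers from the top down with an estimated cost to attack each, and harden every layer that a rational attacker could break for less than the attack is worth. All costs here are hypothetical placeholders; producing real estimates is exactly the hard part the text describes.

```python
from typing import List, Sequence, Tuple

# Hypothetical attack-cost estimates in dollars, ordered from the top layer down.
LAYERS: List[Tuple[str, float]] = [
    ("application", 1e3),
    ("OS and firmware", 1e4),
    ("hardware design and tools", 1e6),
    ("fabrication and assembly", 1e7),
]


def layers_to_harden(layer_costs: Sequence[Tuple[str, float]],
                     attacker_gain: float) -> List[str]:
    """A rational attacker pursues any layer that costs less to break than the
    attack is worth, so each such layer needs more protection (raising its
    attack cost above the gain). Layers whose attack cost already exceeds the
    gain are where you can stop digging."""
    return [name for name, cost in layer_costs if cost < attacker_gain]
```

With these numbers, an attacker expecting a $50,000 payoff makes only the first two layers worth hardening, while an attacker with a $100 million budget justifies protection all the way down.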
