Learning TensorFlow: Building a Neural Network with add_layer()

1. Create a Neural Network Layer

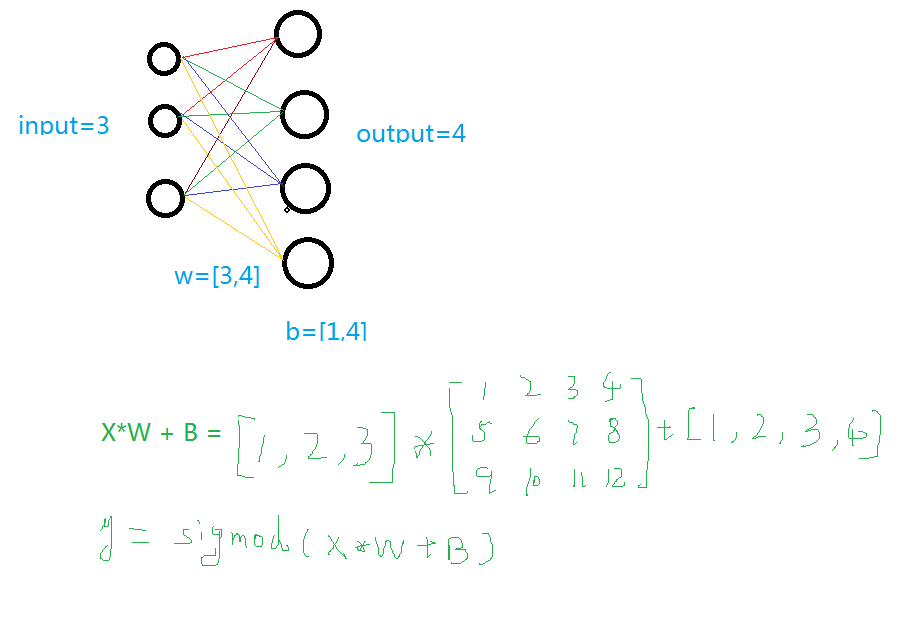

To build a neural network, we need to define a layer that takes an input, processes it using weights and biases, and applies an activation function. This is the basic building block of any neural network.

If you have studied neural networks before, this concept will be familiar. If not, see my other blog post for more details (http://).

The function add_layer() adds a new layer to the network. It takes the layer's inputs, the number of input nodes (in_size), the number of output nodes (out_size), and an optional activation function.

Here’s how it works:

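- Weights = tf.Variable(tf.random_normal([in_size, out_size])): Creates an [in_size, out_size] weight matrix filled with random values drawn from a standard normal distribution (mean 0, standard deviation 1).

- Baises = tf.Variable(tf.zeros([1, out_size]) + 0.1): Creates a single row of out_size biases and sets every entry to 0.1, so the biases start from a small positive value rather than exactly zero.

- y = tf.matmul(inputs, Weights) + Baises: Multiplies the inputs (one row per example, in_size columns) by the weight matrix and adds the bias row, which is broadcast across every example in the batch.

- If an activation function is provided (for example tf.nn.relu), it is applied to y; otherwise y is returned unchanged and the layer is purely linear.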

- The function returns the processed output.
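To make the arithmetic concrete, here is a framework-free sketch of what add_layer() computes, written in plain Python so it runs without TensorFlow. It assumes inputs is a list of rows (one row per example) standing in for a tensor. The parameter names activation_function and seed, and the relu helper, are illustrative choices for this sketch, not part of the original code.

```python
import random


def add_layer(inputs, in_size, out_size, activation_function=None, seed=0):
    """Plain-Python sketch of add_layer(): outputs = inputs @ Weights + biases.

    `seed` is a hypothetical parameter added only to make the sketch reproducible.
    """
    rng = random.Random(seed)
    # Weights: an in_size x out_size matrix drawn from a standard normal
    # distribution (mean 0, stddev 1), mirroring tf.random_normal([in_size, out_size]).
    weights = [[rng.gauss(0.0, 1.0) for _ in range(out_size)] for _ in range(in_size)]
    # Biases: one row of out_size values, all 0.1, mirroring
    # tf.zeros([1, out_size]) + 0.1; the same row is added to every example.
    biases = [0.1] * out_size

    outputs = []
    for row in inputs:
        if len(row) != in_size:
            raise ValueError(f"expected {in_size} features, got {len(row)}")
        # Matrix multiplication for one example, then add the bias row.
        y = [
            sum(row[i] * weights[i][j] for i in range(in_size)) + biases[j]
            for j in range(out_size)
        ]
        # Apply the activation only if one was given; otherwise the layer is linear.
        if activation_function is not None:
            y = [activation_function(v) for v in y]
        outputs.append(y)
    return outputs


def relu(v):
    """Hypothetical helper: element-wise ReLU, standing in for tf.nn.relu."""
    return max(0.0, v)


if __name__ == "__main__":
    hidden = add_layer([[0.5], [-0.2], [0.9]], in_size=1, out_size=10,
                       activation_function=relu)
    print(len(hidden), len(hidden[0]))  # 3 examples, 10 hidden units each
```

With an all-zero input row, every output of a linear layer equals the bias, 0.1, which is a quick way to check the bias initialization.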

2. Training a Quadratic Function

In this example, we train a simple neural network to approximate a quadratic function with some added noise.

We first generate sample data using NumPy. Then, we create a neural network with one hidden layer and one output layer.

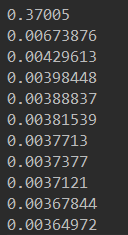

The training process uses gradient descent to minimize the mean squared error between the predicted and actual outputs.
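The objective and the update rule can be written as below. Here N is the number of training points, the hatted y is the network's prediction, f is the hidden layer's activation function, theta collects every weight and bias, and eta is the learning rate, a hyperparameter whose value the text does not specify. The prediction formula assumes, as in a typical setup of this kind, that the activation is applied to the hidden layer only and the output layer is linear.

```latex
\begin{aligned}
\hat{y}_i &= f\!\left(x_i W_1 + b_1\right) W_2 + b_2 \\
\mathcal{L}(\theta) &= \frac{1}{N} \sum_{i=1}^{N} \left(\hat{y}_i - y_i\right)^2 \\
\theta &\leftarrow \theta - \eta \, \nabla_{\theta} \mathcal{L}(\theta)
\end{aligned}
```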

After training, the network's predictions closely follow the underlying quadratic curve, even though every training point carries noise.
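The following plain-Python sketch reproduces the experiment end to end without TensorFlow or NumPy, so the gradients are derived by hand. The specific choices are assumptions for illustration, not values taken from the original code: targets y = x^2 - 0.5 plus Gaussian noise with standard deviation 0.05, 40 evenly spaced inputs in [-1, 1], a 10-unit ReLU hidden layer, a linear output layer, a learning rate of 0.05, and 1,000 full-batch gradient descent steps.

```python
import random


def make_data(n=40, noise_std=0.05, seed=1):
    """Assumed toy data: y = x**2 - 0.5 plus Gaussian noise, x evenly spaced in [-1, 1]."""
    rng = random.Random(seed)
    xs = [-1.0 + 2.0 * i / (n - 1) for i in range(n)]
    ys = [x * x - 0.5 + rng.gauss(0.0, noise_std) for x in xs]
    return xs, ys


def train(xs, ys, hidden=10, lr=0.05, steps=1000, seed=2):
    """Fit a 1 -> hidden (ReLU) -> 1 (linear) network by full-batch gradient descent on MSE."""
    rng = random.Random(seed)
    # Same initialization scheme as add_layer(): normal weights, biases at 0.1.
    w1 = [rng.gauss(0.0, 1.0) for _ in range(hidden)]
    b1 = [0.1] * hidden
    w2 = [rng.gauss(0.0, 1.0) for _ in range(hidden)]
    b2 = 0.1
    n = len(xs)
    losses = []

    for _ in range(steps):
        gw1, gb1, gw2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden
        gb2 = 0.0
        total = 0.0
        for x, y in zip(xs, ys):
            # Forward pass: hidden pre-activations, ReLU, linear output.
            z = [w1[j] * x + b1[j] for j in range(hidden)]
            h = [max(0.0, v) for v in z]
            err = sum(w2[j] * h[j] for j in range(hidden)) + b2 - y
            total += err * err
            # Backward pass: derivative of the mean squared error w.r.t. this prediction.
            d = 2.0 * err / n
            gb2 += d
            for j in range(hidden):
                gw2[j] += d * h[j]
                if z[j] > 0.0:  # ReLU passes gradient only where it was active
                    gw1[j] += d * w2[j] * x
                    gb1[j] += d * w2[j]
        losses.append(total / n)  # MSE measured before this step's update
        # Gradient descent update: move every parameter against its gradient.
        for j in range(hidden):
            w1[j] -= lr * gw1[j]
            b1[j] -= lr * gb1[j]
            w2[j] -= lr * gw2[j]
        b2 -= lr * gb2

    def predict(x):
        h = [max(0.0, w1[j] * x + b1[j]) for j in range(hidden)]
        return sum(w2[j] * h[j] for j in range(hidden)) + b2

    return losses, predict


if __name__ == "__main__":
    xs, ys = make_data()
    losses, predict = train(xs, ys)
    print(f"MSE at step 0: {losses[0]:.4f}, at the last step: {losses[-1]:.4f}")
    print(f"prediction at x=0.5: {predict(0.5):.3f} (noise-free target: -0.25)")
```

In TensorFlow 1.x the backward pass does not have to be written by hand: tf.train.GradientDescentOptimizer(learning_rate).minimize(loss) derives the same gradients from the graph. Because every random source here is seeded, reruns print the same numbers, which makes it easy to see how changing hidden, lr, or steps affects the fit.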

3. Dynamic Visualization of the Training Process

This section demonstrates how to dynamically visualize the training process using Matplotlib. As the model trains, we update the plot to show how the predictions evolve over time.

At each update, the previously plotted prediction line is removed and the current prediction is drawn in its place, so you can watch the fitted curve move toward the data step by step.

This real-time feedback helps us understand how the network learns and adapts during training.

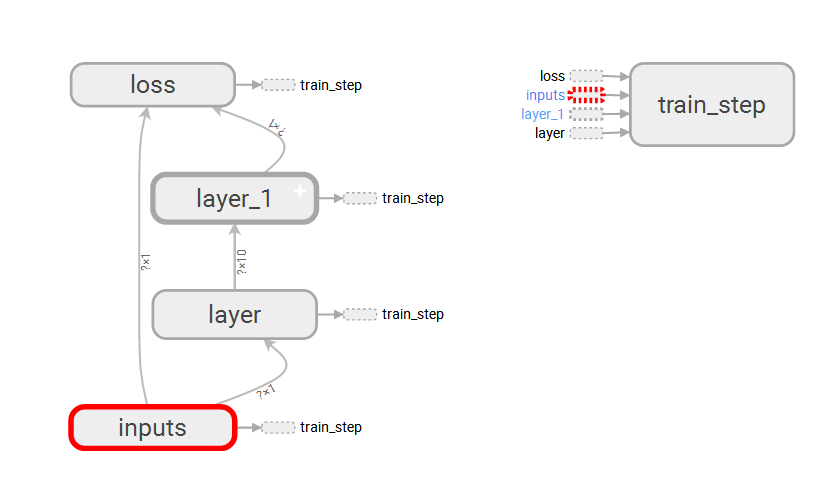

4. TensorBoard for Visualizing the Network Structure

TensorBoard is a powerful tool for visualizing the structure of your TensorFlow graph. It allows you to see how different layers and operations are connected.

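Wrapping related operations in tf.name_scope() (for example, one scope per layer) groups them into a single expandable node in the graph view, which makes the graph much easier to read. Passing a name argument when creating placeholders and variables gives their nodes readable labels as well.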

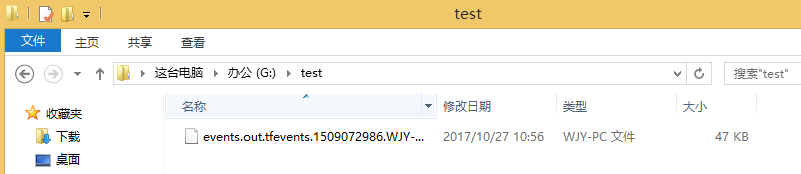

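Once the graph has been written to a log directory (in TensorFlow 1.x, for example, with tf.summary.FileWriter("test", sess.graph), where sess is your tf.Session), you can launch TensorBoard with the following command:

tensorboard --logdir=test

Because test is a relative path, run this command from the directory that contains the log folder, or pass the folder's full path instead. TensorBoard then serves its interface at http://localhost:6006 by default.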

5. Using TensorBoard for Detailed Analysis

TensorBoard offers several features to help analyze the training process. For instance:

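- tf.summary.histogram(): Records the distribution of a tensor's values, such as each layer's weights and biases, for TensorBoard's Histograms and Distributions dashboards.

- tf.summary.scalar(): Records a single value per step, such as the loss, so you can plot it over the course of training; this is the main way to monitor training progress.

tf.summary.merge_all() combines all of these summary ops into a single op. Running that op in a session and passing its result, together with the current step, to the FileWriter's add_summary() method writes the summaries to the log directory.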

By adding these summaries to the TensorBoard log, you can gain deeper insights into how your model behaves during training.

Here is a complete code example that includes all the steps and visualizations described above.
